Part 1 covers definitions and background.

Part 2 gives an example of rain at the border between Light Rain and Moderate Rain.

We now have a tipping bucket rain gauge, so we can be more specific in this study. Our goal is to teach a practical interpretation of rain intensity that will help with our own observations and may also assist the VOS (Voluntary Observing Ship) program once we have a good database established.
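
With a tipping bucket gauge, the rain rate comes from counting tips. Here is a minimal sketch, assuming each tip represents 0.01 inch of rain (a common resolution, but check your own gauge's calibration) and that tip times are logged as Python `datetime` values. The constant, the function name, and the tip times in the example are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: one tip of the bucket = 0.01 inch of rain.
# Check this against your own gauge's calibration.
INCHES_PER_TIP = 0.01


def rain_rate_in_per_hr(tip_times, window_end, window=timedelta(hours=1)):
    """Average rain rate (in/hr) over the window ending at window_end.

    tip_times is a list of datetime objects, one per bucket tip.
    Tips exactly at window_end count; tips exactly at the start do not.
    """
    window_start = window_end - window
    tips = sum(1 for t in tip_times if window_start < t <= window_end)
    hours = window.total_seconds() / 3600
    return tips * INCHES_PER_TIP / hours


# Hypothetical example: one tip every 5 minutes, 11 tips in the hour
start = datetime(2024, 1, 1, 10, 0)
tips = [start + timedelta(minutes=5 * i) for i in range(1, 12)]
rate = rain_rate_in_per_hr(tips, window_end=start + timedelta(hours=1))
print(f"{rate:.2f} in/hr")  # 0.11 in/hr
```

The resolution matters at light intensities: at 0.04"/hr a 0.01-inch bucket tips only about four times an hour, so rates averaged over short windows become coarse.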

The original motivation came from a long-distance outdoor skate (The Red Hook Haul Ash..., which can be done by bike or on skates). During the last event it was raining at just 0.04"/hr, yet we got soaked, and it seemed that such light rain should not do that. But it turns out that a remarkably low number of inches per hour is really a lot of rain. What is called "heavy rain" would better be called amazingly heavy rain. See the earlier article on terms.

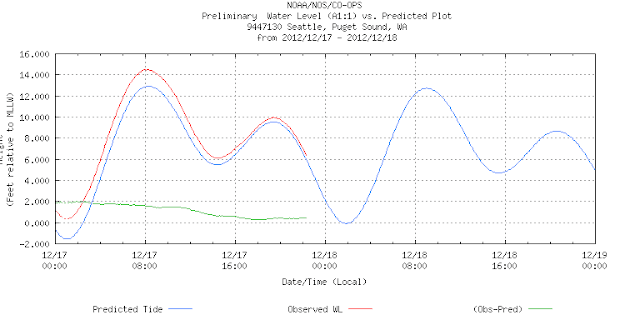

We spent Christmas with a nice, steady 0.10 to 0.13 inches per hour of real rain. That is, it was not showers, whose intensity could be different a few blocks from here. Because the rain was steady, besides our own gauge we have other data from the neighborhood that supports the intensity: namely, the rooftop gauge at UW and the Seattle RainWatch program, another UW product. The picture below is from the UW roof.

This plot shows accumulated rain, so the steady slope means the rate was constant. Dividing 0.45" by 4 hours gives about 0.11"/hr, the same as we experienced here, 4 miles away.
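
The arithmetic above is the slope of the accumulation curve. A short sketch that reproduces it from two readings, and also fits a least-squares slope through several readings, which is less sensitive to one odd value. The hourly readings below are hypothetical numbers chosen to lie along a steady slope, not the actual UW data, and the function names are our own.

```python
def endpoint_rate(accum_start_in, accum_end_in, hours):
    """Average rate (in/hr) from two readings of an accumulation gauge."""
    return (accum_end_in - accum_start_in) / hours


def fitted_rate(times_hr, accum_in):
    """Least-squares slope (in/hr) of accumulated rain against time."""
    n = len(times_hr)
    mean_t = sum(times_hr) / n
    mean_a = sum(accum_in) / n
    num = sum((t - mean_t) * (a - mean_a) for t, a in zip(times_hr, accum_in))
    den = sum((t - mean_t) ** 2 for t in times_hr)
    return num / den


# 0.45" accumulated over 4 hours, as in the UW plot
print(round(endpoint_rate(0.0, 0.45, 4), 4))  # 0.1125

# Hypothetical hourly readings along a steady slope
times = [0, 1, 2, 3, 4]
accum = [0.00, 0.11, 0.23, 0.34, 0.45]
print(round(fitted_rate(times, accum), 3))  # 0.113
```

If the rain were showery, the readings would scatter away from a straight line and the two estimates would start to disagree.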

Below is a screen capture from Seattle RainWatch, taken too late to show the rain we measured. To use this neat site, set the picture to "1 hr pcp" and choose "local metro." This capture shows lighter gray, meaning under 0.10"/hr; at the time of our test the map was solid dark gray, meaning 0.10"/hr or higher. Our own tests and the UW data show it was in fact 0.10 to 0.13. The site also has animations showing the rain moving, as well as forecasts.

Now for the data:

Here is what 0.10 to 0.13 inches per hour looks like in a puddle.

Next, we see what this looks like on the water from some distance off. Notice that it barely shows and has only a slight influence on visibility.

Finally, we look at 0.10" per hour on a windscreen with windshield wipers. We include this example because mariners on ships and other power-driven vessels often use the rain on the windscreen to judge intensity when reporting rainfall. Our goal is to build up a database of this type of video for various rain intensities. This will take a while, but we will plod on as best we can.

Notice the difference in apparent rain intensity between the car (vessel) underway and stopped. At higher speeds, more rain lands on the windows. The car was moving at about 12 kts, a bit faster at times, and the video twice compares this with the car stopped.
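
One way to see why speed matters is a simple geometric sketch; it is our own illustration, not a method from this study. Assume the drops fall straight down at a fixed fall speed (4 m/s here, an illustrative value, since real drops fall faster or slower depending on size), with no wind. Relative to the glass, the drops then arrive from above at the fall speed and from ahead at the vehicle speed. Compared with a level gauge at rest, the water reaching a windscreen tilted at angle θ from horizontal is then cos θ + (speed / fall speed) × sin θ. The function name and default values are hypothetical.

```python
import math

KNOTS_TO_M_PER_S = 0.514444  # 1 knot = 1852 m per 3600 s


def windscreen_factor(speed_kts, tilt_deg, fall_speed_m_s=4.0):
    """Water reaching the glass per unit area, relative to a level gauge at rest.

    tilt_deg: glass angle from horizontal (0 = flat like a roof, 90 = vertical).
    fall_speed_m_s: assumed drop fall speed (illustrative, not measured).
    Ignores wind, splashing, runoff, and wiper action.
    """
    v = speed_kts * KNOTS_TO_M_PER_S
    a = math.radians(tilt_deg)
    # Falling component hits the glass in proportion to cos(tilt);
    # the head-on component from vehicle motion in proportion to sin(tilt).
    return math.cos(a) + (v / fall_speed_m_s) * math.sin(a)


for tilt in (30, 90):
    stopped = windscreen_factor(0, tilt)
    moving = windscreen_factor(12, tilt)
    print(f"tilt {tilt} deg: stopped {stopped:.2f}, at 12 kts {moving:.2f}")
```

Under these assumptions a vertical pane collects almost nothing at rest but roughly one and a half times the gauge catch at 12 kts, the same direction as the effect described above. Wind, drop size, and the actual windscreen angle will all change the numbers.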

End of story. The main point is that even what appears to be significant rain is actually still a small number, i.e., 0.10 inches per hour. Put the other way, 0.50 inches per hour is a real downpour. Now that we have our neat gauge, we will collect data to demonstrate this.
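
To put these numbers side by side, here is a small sketch that labels a rate. It assumes the commonly used US thresholds from the NWS and the AMS Glossary (light up to 0.10"/hr, moderate 0.11 to 0.30"/hr, heavy above 0.30"/hr); check them against the definitions discussed in Part 1. The function name is our own.

```python
MM_PER_INCH = 25.4


def intensity_category(rate_in_per_hr):
    """Classify an hourly rain rate using assumed US (NWS/AMS) thresholds."""
    if rate_in_per_hr <= 0:
        return "none"
    if rate_in_per_hr <= 0.10:
        return "light"
    if rate_in_per_hr <= 0.30:
        return "moderate"
    return "heavy"


# Rates mentioned in this article
for rate in (0.04, 0.10, 0.13, 0.50):
    mm = rate * MM_PER_INCH
    print(f'{rate:.2f}"/hr ({mm:.1f} mm/hr): {intensity_category(rate)}')
```

With these thresholds, the 0.10 to 0.13 rain we had at Christmas sits right on the light/moderate border, the 0.04"/hr that soaked us on skates is light, and 0.50"/hr is well into heavy.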

By the way, the folks who have really studied this subject are the inventors of automatic windshield wipers, who need multiple ways to decide how often to wipe the screen. I just learned that their patent applications include many interesting graphs from their studies, using real values of rain intensity in inches per hour, so we will try to sort that out and include some here.